| |

Pointing out that it is crazy for the data protection police to require internet users to hand over their private identity data to all and sundry (all in the name of child protection of course)

|

|

|

| 31st May 2019

|

|

| See article from

indexoncensorship.org |

Elizabeth Denham, Information Commissioner Information Commissioner's Office, Dear Commissioner Denham, Re: The Draft Age Appropriate Design Code for Online Services We write to

you as civil society organisations who work to promote human rights, both offline and online. As such, we are taking a keen interest in the ICO's Age Appropriate Design Code. We are also engaging with the Government in its White Paper on Online Harms,

and note the connection between these initiatives. Whilst we recognise and support the ICO's aims of protecting and upholding children's rights online, we have severe concerns that as currently drafted the Code will not achieve

these objectives. There is a real risk that implementation of the Code will result in widespread age verification across websites, apps and other online services, which will lead to increased data profiling of both children and adults, and restrictions

on their freedom of expression and access to information. The ICO contends that age verification is not a silver bullet for compliance with the Code, but it is difficult to conceive how online service providers could realistically

fulfil the requirement to be age-appropriate without implementing some form of onboarding age verification process. The practical impact of the Code as it stands is that either all users will have to access online services via a sorting age-gate or adult

users will have to access the lowest common denominator version of services with an option to age-gate up. This creates a de facto compulsory requirement for age-verification, which in turn puts in place a de facto restriction for both children and

adults on access to online content. Requiring all adults to verify they are over 18 in order to access everyday online services is a disproportionate response to the aim of protecting children online and violates fundamental

rights. It carries significant risks of tracking, data breach and fraud. It creates digital exclusion for individuals unable to meet requirements to show formal identification documents. Where age-gating also applies to under-18s, this violation and

exclusion is magnified. It will put an onerous burden on small-to-medium enterprises, which will ultimately entrench the market dominance of large tech companies and lessen choice and agency for both children and adults -- this outcome would be the

antithesis of encouraging diversity and innovation. In its response to the June 2018 Call for Views on the Code, the ICO recognised that there are complexities surrounding age verification, yet the draft Code text fails to engage

with any of these. It would be a poor outcome for fundamental rights and a poor message to children about the intrinsic value of these for all if children's safeguarding was to come at the expense of free expression and equal privacy protection for

adults, including adults in vulnerable positions for whom such protections have particular importance. Mass age-gating will not solve the issues the ICO wishes to address with the Code and will instead create further problems. We

urge you to drop this dangerous idea. Yours sincerely, Open Rights Group

Index on Censorship

Article19

Big Brother Watch

Global Partners Digital

|

| |

Merkel's successor gets in a pickle for claiming that YouTubers should be censored before an election

|

|

|

| 31st May 2019

|

|

| See article from thetrumpet.com |

Prior to the European Parliament elections, popular YouTube users in Germany appealed to their followers to boycott the Christian Democratic Union (CDU), the Social Democrats (SPD) and the Alternative für Deutschland (AfD). Following a miserable

election result, CDU leader Annegret Kramp-Karrenbauer made statements suggesting that in the future, such opinions may be censored. Popular German YouTube star Rezo urged voters to punish the CDU and its coalition partner by not voting for them.

Rezo claimed that the government's inactions on critical issues such as climate change, security and intellectual property rights are destroying our lives and our future. Rezo quickly found the support of 70 other influential YouTube presenters.

But politicians accused him of misrepresenting information and lacking credibility in an effort to discredit him. Nonetheless, his video had nearly 4 million views by Sunday, the day of the election. Experts like Prof. Jürgen Falter of the

University of Mainz believe that Rezo's video swayed the opinions of many undecided voters, especially those under age 30. Kramp-Karrenbauer commented on it during a press conference: What would have happened

in this country if 70 newspapers decided just two days before the election to make the joint appeal: 'Please don't vote for the CDU and SPD'? That would have been a clear case of political bias before the election. What are the

rules that apply to opinions in the analog sphere? And which rules should apply in the digital sphere?

She concluded that these topics will be discussed by the CDU, saying: I'm certain,

they'll play a role in discussions surrounding media policy and democracy in the future.

Many interpreted her statements as an attack on freedom of speech and a call to censor people's opinions online. Ria Schröder, head of the Young

Liberals, wrote: The CDU's understanding of democracy, 'If you are against me, I censor you', is incomprehensible! The right of a user on YouTube or other social media to discuss his or her

political view is covered by Germany's Basic Law, which guarantees freedom of speech.

Kramp-Karrenbauer's statements may threaten her chance for the chancellorship. More importantly, they expose the mindset of Germany's political

leadership.

|

| |

Human rights groups and tech companies unite in an open letter condemning GCHQ's Ghost Protocol suggestion to open a backdoor to snoop on 'encrypted' communication apps

|

|

|

| 31st May 2019

|

|

| See article from

privacyinternational.org |

To GCHQ The undersigned organizations, security researchers, and companies write in response to the proposal published by Ian Levy and Crispin Robinson of GCHQ in Lawfare on November 29, 2018, entitled Principles for a More

Informed Exceptional Access Debate. We are an international coalition of civil society organizations dedicated to protecting civil liberties, human rights, and innovation online; security researchers with expertise in encryption and computer science; and

technology companies and trade associations, all of whom share a commitment to strong encryption and cybersecurity. We welcome Levy and Robinson's invitation for an open discussion, and we support the six principles outlined in the piece. However, we

write to express our shared concerns that this particular proposal poses serious threats to cybersecurity and fundamental human rights including privacy and free expression. The six principles set forth by GCHQ officials are an

important step in the right direction, and highlight the importance of protecting privacy rights, cybersecurity, public confidence, and transparency. We especially appreciate the principles' recognition that governments should not expect unfettered

access to user data, that the trust relationship between service providers and users must be protected, and that transparency is essential. Despite this, the GCHQ piece outlines a proposal for silently adding a law enforcement

participant to a group chat or call. This proposal to add a ghost user would violate important human rights principles, as well as several of the principles outlined in the GCHQ piece. Although the GCHQ officials claim that you don't even have to touch

the encryption to implement their plan, the ghost proposal would pose serious threats to cybersecurity and thereby also threaten fundamental human rights, including privacy and free expression. In particular, as outlined below, the ghost proposal would

create digital security risks by undermining authentication systems, by introducing potential unintentional vulnerabilities, and by creating new risks of abuse or misuse of systems. Importantly, it also would undermine the GCHQ principles on user trust

and transparency set forth in the piece. How the Ghost Proposal Would Work The security in most modern messaging services relies on a technique called public key cryptography. In such systems, each device generates a pair of very

large mathematically related numbers, usually called keys. One of those keys -- the public key -- can be distributed to anyone. The corresponding private key must be kept secure, and not shared with anyone. Generally speaking, a person's public key can

be used by anyone to send an encrypted message that only the recipient's matching private key can unscramble. Within such systems, one of the biggest challenges to securely communicating is authenticating that you have the correct public key for the

person you're contacting. If a bad actor can fool a target into thinking a fake public key actually belongs to the target's intended communicant, it won't matter that the messages are encrypted in the first place because the contents of those encrypted

communications will be accessible to the malicious third party. Encrypted messaging services like iMessage, Signal, and WhatsApp, which are used by well over a billion people around the globe, store everyone's public keys on the

platforms' servers and distribute public keys corresponding to users who begin a new conversation. This is a convenient solution that makes encryption much easier to use. However, it requires every person who uses those messaging applications to trust

the services to deliver the correct, and only the correct, public keys for the communicants of a conversation when asked. The protocols behind different messaging systems vary, and they are complicated. For example, in two-party

communications, such as a reporter communicating with a source, some services provide a way to ensure that a person is communicating only with the intended parties. This authentication mechanism is called a safety number in Signal and a security code in

WhatsApp (we will use the term safety number). They are long strings of numbers that are derived from the public keys of the two parties of the conversation, which can be compared between them -- via some other verifiable communications channel such as a

phone call -- to confirm that the strings match. Because the safety number is per pair of communicators -- more precisely, per pair of keys -- a change in the value means that a key has changed, and that can mean that it's a different party entirely.
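As a rough illustration of the idea described above (and not the algorithm that Signal or WhatsApp actually use), a safety number can be sketched as a short digest computed over both parties' public keys. The function name safety_number, the byte strings standing in for public keys, and the choice of SHA-256 rendered as six five-digit groups are all assumptions made for this sketch.

```python
import hashlib


def safety_number(key_a: bytes, key_b: bytes, groups: int = 6) -> str:
    """Derive a short, comparable number from a pair of public keys.

    Hypothetical sketch only: real messaging apps use their own,
    more elaborate fingerprint formats.
    """
    # Sort the keys so both parties compute the same value
    # regardless of who is treated as "first".
    first, second = sorted((key_a, key_b))

    # Length-prefix each key so the concatenation is unambiguous.
    material = (
        len(first).to_bytes(2, "big") + first
        + len(second).to_bytes(2, "big") + second
    )
    digest = hashlib.sha256(material).digest()

    # Turn successive 4-byte chunks of the digest into 5-digit groups
    # that are easy to read aloud over a phone call.
    chunks = []
    for i in range(groups):
        value = int.from_bytes(digest[4 * i:4 * i + 4], "big") % 100000
        chunks.append(f"{value:05d}")
    return " ".join(chunks)


# Placeholder byte strings standing in for real public keys.
alice_key = b"alice-public-key-placeholder"
bob_key = b"bob-public-key-placeholder"
ghost_key = b"ghost-public-key-placeholder"

# Both parties see the same number for their conversation.
print(safety_number(alice_key, bob_key) == safety_number(bob_key, alice_key))

# If one key is swapped for another, the number no longer matches.
print(safety_number(alice_key, bob_key) == safety_number(alice_key, ghost_key))
```

Because the value depends on both keys, substituting a different key for either party yields a different number, which is what lets a careful user notice the change.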

People can thus choose to be notified when these safety numbers change, to ensure that they can maintain this level of authentication. Users can also check the safety number before each new communication begins, and thereby guarantee that there has been

no change of keys, and thus no eavesdropper. Systems without a safety number or security code do not provide the user with a method to guarantee that the user is securely communicating only with the individual or group with whom they expect to be

communicating. Other systems provide security in other ways. For example, iMessage has a cluster of public keys -- one per device -- that it keeps associated with an account corresponding to an identity

of a real person. When a new device is added to the account, the cluster of keys changes, and each of the user's devices shows a notice that a new device has been added upon noticing that change. The ghost key proposal put forward

by GCHQ would enable a third party to see the plain text of an encrypted conversation without notifying the participants. But to achieve this result, their proposal requires two changes to systems that would seriously undermine user security and trust.

First, it would require service providers to surreptitiously inject a new public key into a conversation in response to a government demand. This would turn a two-way conversation into a group chat where the government is the additional participant, or

add a secret government participant to an existing group chat. Second, in order to ensure the government is added to the conversation in secret, GCHQ's proposal would require messaging apps, service providers, and operating systems to change their

software so that it would 1) change the encryption schemes used, and/or 2) mislead users by suppressing the notifications that routinely appear when a new communicant joins a chat. The Proposal Creates Serious Risks to

Cybersecurity and Human Rights The GCHQ's ghost proposal creates serious threats to digital security: if implemented, it will undermine the authentication process that enables users to verify that they are communicating with the right people, introduce

potential unintentional vulnerabilities, and increase risks that communications systems could be abused or misused. These cybersecurity risks mean that users cannot trust that their communications are secure, as users would no longer be able to trust

that they know who is on the other end of their communications, thereby posing threats to fundamental human rights, including privacy and free expression. Further, systems would be subject to new potential vulnerabilities and risks of abuse.

Integrity and Authentication Concerns As explained above, the ghost proposal requires modifying how authentication works. Like the end-to-end encryption that protects communications while they are in transit, authentication is a

critical aspect of digital security and the integrity of sensitive data. The process of authentication allows users to have confidence that the other users with whom they are communicating are who they say they are. Without reliable methods of

authentication, users cannot know if their communications are secure, no matter how robust the encryption algorithm, because they have no way of knowing who they are communicating with. This is particularly important for users like journalists who need

secure encryption tools to guarantee source protection and be able to do their jobs. Currently the overwhelming majority of users rely on their confidence in reputable providers to perform authentication functions and verify that

the participants in a conversation are the people they think they are, and only those people. The GCHQ's ghost proposal completely undermines this trust relationship and the authentication process. Authentication is still a

difficult challenge for technologists and is currently an active field of research. For example, providing a meaningful and actionable record about user key transitions presents several known open research problems, and key verification itself is an

ongoing subject of user interface research. If, however, security researchers learn that authentication systems can and will be bypassed by third parties like government agencies, such as GCHQ, this will create a strong disincentive for continuing

research in this critical area. Potential for Introducing Unintentional Vulnerabilities Beyond undermining current security tools and the system for authenticating the communicants in an encrypted chat, GCHQ's ghost proposal could

introduce significant additional security threats. There are also outstanding questions about how the proposal would be effectively implemented. The ghost proposal would introduce a security threat to all users of a targeted

encrypted messaging application since the proposed changes could not be exposed only to a single target. In order for providers to be able to suppress notifications when a ghost user is added, messaging applications would need to rewrite the software

that every user relies on. This means that any mistake made in the development of this new function could create an unintentional vulnerability that affects every single user of that application. As security researcher Susan

Landau points out, the ghost proposal involves changing how the encryption keys are negotiated in order to accommodate the silent listener, creating a much more complex protocol--raising the risk of an error. (That actually depends on how the algorithm

works; in the case of iMessage, Apple has not made the code public.) A look back at recent news stories on unintentional vulnerabilities that are discovered in encrypted messaging apps like iMessage, and devices ranging from the iPhone to smartphones

that run Google's Android operating system, lends credence to her concerns. Any such unintentional vulnerability could be exploited by malicious third parties. Possibility of Abuse or Misuse of the Ghost Function The ghost proposal

also introduces an intentional vulnerability. Currently, the providers of end-to-end encrypted messaging applications like WhatsApp and Signal cannot see into their users' chats. By requiring an exceptional access mechanism like the ghost proposal, GCHQ

and U.K. law enforcement officials would require messaging platforms to open the door to surveillance abuses that are not possible today. At a recent conference on encryption policy, Cindy Southworth, the Executive Vice President

at the U.S. National Network to End Domestic Violence (NNEDV), cautioned against introducing an exceptional access mechanism for law enforcement, in part, because of how it could threaten the safety of victims of domestic and gender-based violence.

Specifically, she warned that [w]e know that not only are victims in every profession, offenders are in every profession...How do we keep safe the victims of domestic violence and stalking? Southworth's concern was that abusers could either work for the

entities that could exploit an exceptional access mechanism, or have the technical skills required to hack into the platforms that developed this vulnerability. While companies and some law enforcement and intelligence agencies

would surely implement strict procedures for utilizing this new surveillance function, those internal protections are insufficient. And in some instances, such procedures do not exist at all. In 2016, a U.K. court held that because the rules for how the

security and intelligence agencies collect bulk personal datasets and bulk communications data (under a particular legislative provision) were unknown to the public, those practices were unlawful. As a result of that determination, it asked the agencies

- GCHQ, MI5, and MI6 - to review whether they had unlawfully collected data about Privacy International. The agencies subsequently revealed that they had unlawfully surveilled Privacy International. Even where procedures exist

for access to data that is collected under current surveillance authorities, government agencies have not been immune to surveillance abuses and misuses despite the safeguards that may have been in place. For example, a former police officer in the U.S.

discovered that 104 officers in 18 different agencies across the state had accessed her driver's license record 425 times, using the state database as their personal Facebook service. Thus, once new vulnerabilities like the ghost protocol are created,

new opportunities for abuse and misuse are created as well. Finally, if U.K. officials were to demand that providers rewrite their software to permit the addition of a ghost U.K. law enforcement participant in encrypted chats,

there is no way to prevent other governments from relying on this newly built system. This is of particular concern with regard to repressive regimes and any country with a poor record on protecting human rights. The Proposal

Would Violate the Principle That User Trust Must be Protected The GCHQ proponents of the ghost proposal argue that [a]ny exceptional access solution should not fundamentally change the trust relationship between a service provider and its users. This

means not asking the provider to do something fundamentally different to things they already do to run their business. However, the exceptional access mechanism that they describe in the same piece would have exactly the effect they say they wish to

avoid: it would degrade user trust and require a provider to fundamentally change its service. The moment users find out that a software update to their formerly secure end-to-end encrypted messaging application can now allow

secret participants to surveil their conversations, they will lose trust in that service. In fact, we've already seen how likely this outcome is. In 2017, the Guardian published a flawed report in which it incorrectly stated that WhatsApp had a backdoor

that would allow third parties to spy on users' conversations. Naturally, this inspired significant alarm amongst WhatsApp users, and especially users like journalists and activists who engage in particularly sensitive communications. In this case, the

ultimate damage to user trust was mitigated because cryptographers and security organizations quickly understood and disseminated critical deficits in the report, and the publisher retracted the story. However, if users were

to learn that their encrypted messaging service intentionally built a functionality to allow for third-party surveillance of their communications, that loss of trust would understandably be widespread and permanent. In fact, when President Obama's

encryption working group explored technical options for an exceptional access mechanism, it cited loss of trust as the primary reason not to pursue provider-enabled access to encrypted devices through current update procedures. The working group

explained that this could be dangerous to overall cybersecurity, since its use could call into question the trustworthiness of established software update channels. Individual users aware of the risk of remote access to their devices, could also choose

to turn off software updates, rendering their devices significantly less secure as time passed and vulnerabilities were discovered [but] not patched. While the proposal that prompted these observations was targeted at operating system updates, the same

principles concerning loss of trust and the attendant loss of security would apply in the context of the ghost proposal. Any proposal that undermines user trust penalizes the overwhelming majority of technology users while

permitting those few bad actors to shift to readily available products beyond the law's reach. It is a reality that encryption products are available all over the world and cannot be easily constrained by territorial borders. Thus, while the few

nefarious actors targeted by the law will still be able to avail themselves of other services, average users -- who may also choose different services -- will disproportionately suffer consequences of degraded security and trust.

The Ghost Proposal Would Violate the Principle That Transparency is Essential

Although we commend GCHQ officials for initiating this public conversation and publishing their ghost proposal online, if the U.K. were to implement this approach, these activities would be cloaked in secrecy. Although it is unclear which precise legal authorities GCHQ and U.K. law enforcement would rely upon, the Investigatory Powers Act grants U.K. officials the power to impose broad non-disclosure agreements that would prevent service providers from even acknowledging they had received a demand to change their systems, let alone the extent to which they complied. The secrecy that would surround implementation of the ghost proposal would exacerbate the damage to authentication systems and user trust as described above.

Conclusion For these reasons, the undersigned organizations, security researchers, and companies urge GCHQ to abide by the six principles they have announced, abandon the ghost proposal, and avoid any alternate approaches that

would similarly threaten digital security and human rights. We would welcome the opportunity for a continuing dialogue on these important issues. Sincerely, Civil Society Organizations Access Now Big

Brother Watch Blueprint for Free Speech Center for Democracy & Technology Defending Rights and Dissent Electronic Frontier Foundation Engine Freedom of the Press Foundation Government Accountability Project Human Rights Watch International Civil

Liberties Monitoring Group Internet Society Liberty New America's Open Technology Institute Open Rights Group Principled Action in Government Privacy International Reporters Without Borders Restore The Fourth Samuelson-Glushko

Canadian Internet Policy & Public Interest Clinic (CIPPIC) TechFreedom The Tor Project X-Lab Technology Companies and Trade Associations ACT | The App Association Apple Google Microsoft Reform Government Surveillance (RGS is a

coalition of technology companies) Startpage.com WhatsApp Security and Policy Experts* Steven M. Bellovin, Percy K. and Vida L.W. Hudson Professor of Computer Science; Affiliate faculty, Columbia Law School

Jon Callas, Senior Technology Fellow, ACLU L. Jean Camp, Professor of Informatics, School of Informatics, Indiana University Stephen Checkoway, Assistant Professor, Oberlin College Computer Science Department Lorrie Cranor, Carnegie Mellon University

Zakir Durumeric, Assistant Professor, Stanford University Dr. Richard Forno, Senior Lecturer, UMBC, Director, Graduate Cybersecurity Program & Assistant Director, UMBC Center for Cybersecurity Joe Grand, Principal Engineer & Embedded Security Expert,

Grand Idea Studio, Inc. Daniel K. Gillmor, Senior Staff Technologist, ACLU Peter G. Neumann, Chief Scientist, SRI International Computer Science Lab Dr. Christopher Parsons, Senior Research Associate at the Citizen Lab, Munk School of Global Affairs and

Public Policy, University of Toronto Phillip Rogaway, Professor, University of California, Davis Bruce Schneier Adam Shostack, Author, Threat Modeling: Designing for Security Ashkan Soltani, Researcher and Consultant - Former FTC CTO and Whitehouse

Senior Advisor Richard Stallman, President, Free Software Foundation Philip Zimmermann, Delft University of Technology Cybersecurity Group

|

| |

Ofcom does its bit to support state internet censorship suggesting that this is what people want

|

|

|

| 30th May 2019

|

|

| See Online Nation report [pdf] from ofcom.org.uk

|

Ofcom has published a wide-ranging report about internet usage in Britain. Of course Ofcom takes the opportunity to bolster the UK government's push to censor the internet. Ofcom writes: When prompted, 83% of adults expressed

concern about harms to children on the internet. The greatest concern was bullying, abusive behaviour or threats (55%) and there were also high levels of concern about children's exposure to inappropriate content including pornography (49%), violent /

disturbing content (46%) and content promoting self-harm (42%). Four in ten adults (39%) were concerned about children spending too much time on the internet. Many 12 to 15-year-olds said they have experienced potentially harmful

conduct from others on the internet. More than a quarter (28%) said they had had unwelcome friend or follow requests or unwelcome contact, 23% had experienced bullying, abusive behaviour or threats, 20% had been 'trolled' and 19% had experienced someone

pretending to be another person. Fifteen per cent said they had viewed violent or disturbing content. Social media sites, and Facebook in particular, are the most commonly-cited source of online harm for most of the types of

potential harm we asked about. For example, 69% of adults who said they had come across fake news said they had seen it on Facebook. Among 12 to 15-year-olds, Facebook was the most commonly-mentioned source of most of the potentially harmful experiences.

Most adults say they would support more regulation of social media sites (70%), video sharing sites (64%) and instant messenger services (61%). Compared to our 2018 research, support for more online regulation appears to have

strengthened. However, just under half (47%) of adult internet users recognised that websites and social media sites have a careful balance to maintain in terms of supporting free speech, even where some users might find the content offensive.

|

| |

|

|

|

| 29th May 2019

|

|

|

The Photographers Fighting Instagram's Censorship of Nude Bodies. By Kelsey Ables See article from

artsy.net |

| |

|

|

|

| 29th May 2019

|

|

|

Facebook Is Already Working Towards Germany's End-to-End Encryption Backdoor Vision See

article from forbes.com |

| |

Facebook censors an artist who, rather hatefully herself, reworks 'Make America Great Again' hats into symbols of hate

|

|

|

| 28th May 2019

|

|

| Thanks to Nick

See article from thehill.com

|

An artist who redesigns President Trump's Make America Great Again (MAGA) hats into recognizable symbols of hate speech says she has been banned from Facebook. Kate Kretz rips apart the iconic red campaign hat and resews it to look like other

symbols, such as a Nazi armband or a Ku Klux Klan hood. It all seems a bit hateful and inflammatory though, sneering at the people who choose to wear the caps. Hopefully the cap wearers will recall that free speech is part of what once made

America great. The artist said Facebook took down an image of the reimagined Nazi paraphernalia for violating community standards. She appealed the decision and labeled another image with text clarifying that the photo was of a piece of

art, but her entire account was later disabled. Kretz said: I understand doing things for the greater good. However, I think artists are a big part of Facebook's content providers, and they owe us a fair hearing.

|

| |

Poland heroically challenges the EU's disgraceful and recently passed internet censorship and copyright law

|

|

|

| 25th May 2019

|

|

| See article from thefirstnews.com

|

Poland is challenging the EU's copyright directive in the EU Court of Justice (CJEU) on grounds of its threats to freedom of speech on the internet, Foreign Minister Jacek Czaputowicz said on Friday. The complaint especially addresses a mechanism

obliging online services to run preventive checks on user content even without suspicion of copyright infringement. Czaputowicz explained at a press conference in Warsaw: Poland has charged the copyright directive to

the CJEU, because in our opinion it creates a fundamental threat to freedom of speech on the internet. Such censorship is forbidden both by the Polish constitution and EU law. The Charter of Fundamental Rights (of the European Union - PAP) guarantees

freedom of speech.

The directive is to change the way online content is published and monitored. EU members have two years to introduce the new regulations. Against the directive are Poland, Holland, Italy, Finland and Luxembourg.

|

| |

|

|

|

| 25th May 2019

|

|

|

YouTube creators are having their livelihoods trashed by arbitrary and often trivial claims from copyright holders. See

article from theverge.com |

| |

Ireland considers internet porn censorship as implemented by the UK

|

|

|

| 23rd May 2019

|

|

| See article

from thejournal.ie |

Ireland's Justice Minister Charlie Flanagan confirmed that the Irish government will consider a similar system to the UK's so-called porn block law as part of new legislation on online safety. Flanagan said: I would be

very keen that we would engage widely to ensure that Ireland could benefit from what is international best practice here and that is why we are looking at what is happening in other jurisdictions.

The Irish communications minister

Richard Bruton said there are also issues around privacy laws and this has to be carefully dealt with. He said: It would be my view that government through the strategy that we have published, we have a cross-government

committee who is looking at policy development to ensure online safety, and I think that forum is the forum where I believe we will discuss what should be done in that area because I think there is a genuine public concern, it hasn't been the subject of

the Law Reform Commission or other scrutiny of legislation in this area, but it was worthy of consideration, but it does have its difficulties, as the UK indeed has recognised also.

|

| |

|

|

|

| 22nd May 2019

|

|

|

Proposed controversial online age verification checks could increase the risk of identity theft and other cyber crimes, warn security experts See

article from computerweekly.com |

| |

An EFF project to show how people are unfairly censored by social media platforms' absurd enforcement of content rules

|

|

|

| 21st May 2019

|

|

| See press release from

eff.org

See TOSsedOut from eff.org |

Users Without Resources to Fight Back Are Most Affected by Unevenly-Enforced Rules The Electronic Frontier Foundation (EFF) has launched TOSsed Out, a project to highlight the vast spectrum of people silenced by social media

platforms that inconsistently and erroneously apply terms of service (TOS) rules. TOSsed Out will track and publicize the ways in which TOS and other speech moderation rules are unevenly enforced, with little to no transparency,

against a range of people for whom the Internet is an irreplaceable forum to express ideas, connect with others, and find support. This includes people on the margins who question authority, criticize the powerful, educate, and call

attention to discrimination. The project is a continuation of work EFF began five years ago when it launched Onlinecensorship.org to collect speech takedown reports from users. Last week the White House launched a tool to report

take downs, following the president's repeated allegations that conservatives are being censored on social media, said Jillian York, EFF Director for International Freedom of Expression. But in reality, commercial content moderation practices negatively

affect all kinds of people with all kinds of political views. Black women get flagged for posting hate speech when they share experiences of racism. Sex educators' content is removed because it was deemed too risqué. TOSsed Out will show that trying to

censor social media at scale ends up removing far too much legal, protected speech that should be allowed on platforms. EFF conceived TOSsed Out in late 2018 after seeing more takedowns resulting from increased public and

government pressure to deal with objectionable content, as well as the rise in automated tools. While calls for censorship abound, TOSsed Out aims to demonstrate how difficult it is for platforms to get it right. Platform rules--either through automation

or human moderators--unfairly ban many people who don't deserve it and disproportionately impact those with insufficient resources to easily move to other mediums to speak out, express their ideas, and build a community. EFF is

launching TOSsed Out with several examples of TOS enforcement gone wrong, and invites visitors to the site to submit more. In one example, a reverend couldn't initially promote a Black Lives Matter-themed concert on Facebook, eventually discovering that

using the words Black Lives Matter required additional review. Other examples include queer sex education videos being removed and automated filters on Tumblr flagging a law professor's black and white drawings of design patents as adult content.

Political speech is also impacted; one case highlights the removal of a parody account lampooning presidential candidate Beto O'Rourke. The current debates and complaints too often center on people with huge followings getting

kicked off of social media because of their political ideologies. This threatens to miss the bigger problem. TOS enforcement by corporate gatekeepers far more often hits people without the resources and networks to fight back to regain their voice

online, said EFF Policy Analyst Katharine Trendacosta. Platforms over-filter in response to pressure to weed out objectionable content, and a broad range of people at the margins are paying the price. With TOSsed Out, we seek to put pressure on those

platforms to take a closer look at who is being actually hurt by their speech moderation rules, instead of just responding to the headline of the day.

|

| |

Firefox has a research project to integrate with TOR to create a Super Private Browsing mode

|

|

|

| 21st May 2019

|

|

| See article from mozilla-research.forms.fm |

Age verification for porn is pushing internet users into areas of the internet that provide more privacy, security and resistance to censorship. I'd have thought that security services would prefer internet users to remain in the more open areas

of the internet for easier snooping. So I wonder if protecting kids from stumbling across porn is worth the increased difficulty in monitoring terrorists and the like? Or perhaps GCHQ can already see through the encrypted internet.

RQ12: Privacy & Security for Firefox Mozilla has an interest in potentially integrating more of Tor into Firefox, for the purposes of providing a Super Private Browsing (SPB) mode for our users.

Tor offers privacy and anonymity on the Web, features which are sorely needed in the modern era of mass surveillance, tracking and fingerprinting. However, enabling a large number of additional users to make use of the Tor network

requires solving for inefficiencies currently present in Tor so as to make the protocol optimal to deploy at scale. Academic research is just getting started with regards to investigating alternative protocol architectures and route selection protocols,

such as Tor-over-QUIC, employing DTLS, and Walking Onions. What alternative protocol architectures and route selection protocols would offer acceptable gains in Tor performance? And would they preserve Tor properties? Is it truly

possible to deploy Tor at scale? And what would the full integration of Tor and Firefox look like? |

| |

|

|

|

| 21st May 2019

|

|

|

Thanks to Facebook, Your Cellphone Company Is Watching You More Closely Than Ever See article from theintercept.com

|

| |

The next monstrosity from our EU lawmakers is to relax net neutrality laws so that large internet corporates can better snoop on and censor the European peoples

|

|

|

| 18th May 2019

|

|

| See article from avn.com

|

The internet technology known as deep packet inspection is currently illegal in Europe, but big telecom companies doing business in the European Union want to change that. They want deep packet inspection permitted as part of the new net neutrality rules

currently under negotiation in the EU, but on Wednesday, a group of 45 privacy and internet freedom advocates and groups published an open letter warning against the change: Dear Vice-President Andrus Ansip, (and others)

We are writing you in the context of the evaluation of Regulation (EU) 2015/2120 and the reform of the BEREC Guidelines on its implementation. Specifically, we are concerned because of the increased use of Deep Packet Inspection (DPI)

technology by providers of internet access services (IAS). DPI is a technology that examines data packets that are transmitted in a given network beyond what would be necessary for the provision of IAS by looking at specific content from the part of the

user-defined payload of the transmission. IAS providers are increasingly using DPI technology for the purpose of traffic management and the differentiated pricing of specific applications or services (e.g. zero-rating) as part of

their product design. DPI allows IAS providers to identify and distinguish traffic in their networks in order to identify traffic of specific applications or services for purposes such as billing them differently, throttling or prioritising them over

other traffic. The undersigned would like to recall the concerning practice of examining domain names or the addresses (URLs) of visited websites and other internet resources. The evaluation of these types of data can reveal

sensitive information about a user, such as preferred news publications, interest in specific health conditions, sexual preferences, or religious beliefs. URLs directly identify specific resources on the world wide web (e.g. a specific image, a specific

article in an encyclopedia, a specific segment of a video stream, etc.) and give direct information on the content of a transmission. A mapping of differential pricing products in the EEA conducted in 2018 identified 186 such

products which potentially make use of DPI technology. Among those, several of these products by mobile operators with large market shares are confirmed to rely on DPI because their products offer providers of applications or services the option of

identifying their traffic via criteria such as Domain names, SNI, URLs or DNS snooping. Currently, the BEREC Guidelines clearly state that traffic management based on the monitoring of domain names and URLs (as implied by the

phrase transport protocol layer payload) is not reasonable traffic management under the Regulation. However, this clear rule has been mostly ignored by IAS providers in their treatment of traffic. The nature of DPI necessitates

telecom expertise as well as expertise in data protection issues. Yet, we observe a lack of cooperation between national regulatory authorities for electronic communications and regulatory authorities for data protection on this issue, both in the

decisions put forward on these products as well as cooperation on joint opinions on the question in general. For example, some regulators issue justifications of DPI based on the consent of the customer of the IAS provider which crucially ignores the

clear ban of DPI in the BEREC Guidelines and the processing of the data of the other party communicating with the subscriber, which never gave consent. Given the scale and sensitivity of the issue, we urge the Commission and BEREC

to carefully consider the use of DPI technologies and their data protection impact in the ongoing reform of the net neutrality Regulation and the Guidelines. In addition, we recommend to the Commission and BEREC to explore an interpretation of the

proportionality requirement included in Article 3, paragraph 3 of Regulation 2015/2120 in line with the data minimization principle established by the GDPR. Finally, we suggest to mandate the European Data Protection Board to produce guidelines on the

use of DPI by IAS providers. Best regards, European Digital Rights, Europe; Electronic Frontier Foundation, International; Council of European Professional Informatics Societies, Europe; Article 19,

International; Chaos Computer Club e.V, Germany; epicenter.works - for digital rights, Austria; Austrian Computer Society (OCG), Austria; Bits of Freedom, the Netherlands; La Quadrature du Net, France; ApTI, Romania; Code4Romania, Romania; IT-Pol, Denmark; Homo

Digitalis, Greece; Hermes Center, Italy; X-net, Spain; Vrijschrift, the Netherlands; Dataskydd.net, Sweden; Electronic Frontier Norway (EFN), Norway; Alternatif Bilisim (Alternative Informatics Association), Turkey; Digitalcourage, Germany; Fitug e.V., Germany;

Digitale Freiheit, Germany; Deutsche Vereinigung für Datenschutz e.V. (DVD), Germany; Gesellschaft für Informatik e.V. (GI), Germany; LOAD e.V. - Verein für liberale Netzpolitik, Germany (And others)
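To make the letter's point about URLs concrete, here is a minimal Python sketch of what an on-path observer can read from an unencrypted HTTP request. It is an illustration only, not how any particular DPI product works; the function name `visible_to_inspector` and the host `encyclopedia.example` are made up for the example.

```python
def visible_to_inspector(raw_request: bytes) -> dict:
    """Return the fields a passive observer can read from a plaintext HTTP request.

    Hypothetical helper for illustration; real DPI equipment is far more elaborate.
    """
    text = raw_request.decode("iso-8859-1")
    # Everything before the blank line is the request line plus headers.
    head, _, _body = text.partition("\r\n\r\n")
    lines = head.split("\r\n")
    method, path, _version = lines[0].split(" ", 2)

    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()

    host = headers.get("host", "")
    # Host plus path identifies the exact resource being requested.
    return {"method": method, "host": host, "url": f"http://{host}{path}"}


# An invented request for an encyclopedia article about a health condition.
sample = (
    b"GET /wiki/Some_health_condition HTTP/1.1\r\n"
    b"Host: encyclopedia.example\r\n"
    b"User-Agent: demo\r\n"
    b"\r\n"
)
print(visible_to_inspector(sample)["url"])
```

The full address of the article is recoverable from the payload alone, which is the kind of inference about a user's interests that the signatories warn about.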

|

| |

International VPNs decline to hook up to Russian censorship machines

|

|

|

| 18th May 2019

|

|

| See article from top10vpn.com

|

In March, the Russian government's internet censor Roskomnadzor contacted 10 leading VPN providers to demand they comply with local censorship laws or risk being blocked. Roskomnadzor required them to hook up to a dedicated government system that

defines a list of websites required to be blocked to Russian internet users. The VPN providers contacted were ExpressVPN, NordVPN, IPVanish, VPN Unlimited, VyprVPN, HideMyAss!, TorGuard, Hola VPN, OpenVPN, and Kaspersky Secure Connection. The

deadline has now passed and the only VPN company that has agreed to comply with the new requirements is the Russia-based Kaspersky Secure Connection. Most other providers on the list have removed their VPN servers from Russia altogether, so as not

to be at risk of being asked to hand over information to Russia about their customers. |

| |

South African government considers reams of new law to protect children from porn

|

|

|

| 17th May 2019

|

|

| See press release [pdf] from

justice.gov.za

See discussion paper [pdf] from justice.gov.za |

The South African Law Reform Commission is debating widespread changes to the law pertaining to the protection of children. Much of the debate is about serious crimes of child abuse but there is a significant portion devoted to protecting children from legal

adult pornography. The commission writes: SEXUAL OFFENCES: PORNOGRAPHY AND CHILDREN On 16 March 2019 the Commission approved the publication of its discussion paper on sexual offences (pornography and children)

for comment. Five main topics are discussed in this paper, namely:

Access to or exposure of a child to pornography; Creation and distribution of child sexual abuse material; Consensual self-child sexual abuse material (sexting);

Grooming of a child and other sexual contact crimes associated with or facilitated by pornography or child sexual abuse material; and Investigation, procedure & sentencing.

The Commission invites comment on the discussion paper and the draft Bill which accompanies it. Comment may also be made on related issues of concern which have not been raised in the discussion paper. The closing date for comment is

30 July 2019. The methodology discussed doesn't seem to match well to the real world. The authors seem to put a lot of stock in the notion that every device can contain some sort of simple porn block app that can render a device unable to access

porn and hence be safe for children. The proposed law suggests penalties should unprotected devices get bought, sold, or used by children. Perhaps someone should invent such an app to help out South Africa. |

| |

World governments get together with tech companies in Paris to step up internet censorship. But Trump is unimpressed with the one-sided direction that the censorship is going

|

|

|

| 16th May 2019

|

|

| See

article from washingtonpost.com

|

The United States has decided not to support the censorship call by 18 governments and five top American tech firms and declined to endorse a New Zealand-led censorship effort responding to the live-streamed shootings at two Christchurch mosques. White

House officials said free-speech concerns prevented them from formally signing onto the largest campaign to date targeting extremism online. World leaders, including British Prime Minister Theresa May, Canadian Prime Minister Justin Trudeau and

Jordan's King Abdullah II, signed the Christchurch Call, which was unveiled at a gathering in Paris that had been organized by French President Emmanuel Macron and New Zealand Prime Minister Jacinda Ardern. The governments pledged to counter

online extremism, including through new regulation, and to encourage media outlets to apply ethical standards when depicting terrorist events online. But the White House opted against endorsing the effort, and President Trump did not join the

other leaders in Paris. The White House felt the document could present constitutional concerns, officials there said, potentially conflicting with the First Amendment. Indeed Trump has previously threatened social media out of concern that it's biased

against conservatives. Amazon, Facebook, Google, Microsoft and Twitter also signed on to the document, pledging to work more closely with one another and governments to make certain their sites do not become conduits for terrorism. Twitter CEO

Jack Dorsey was among the attendees at the conference. The companies agreed to accelerate research and information sharing with governments in the wake of recent terrorist attacks. They said they'd pursue a nine-point plan of technical remedies

designed to find and combat objectionable content, including instituting more user-reporting systems, more refined automatic detection systems, improved vetting of live-streamed videos and more collective development of organized research and

technologies the industry could build and share. The companies also promised to implement appropriate checks on live-streaming, with the aim of ensuring that videos of violent attacks aren't broadcast widely, in real time, online. To that end,

Facebook this week announced a new one-strike policy, in which users who violate its rules -- such as sharing content from known terrorist groups -- could be prohibited from using its live-streaming tools.

|

| |

Donald Trump sets up an internet page to report examples of politically biased internet censorship

|

|

|

| 16th May 2019

|

|

| See whitehouse.typeform.com |

The US White House has set up a page on the online form building website, typeform.com. Donald Trump asks to be informed of biased censorship. The form reads: SOCIAL MEDIA PLATFORMS should advance FREEDOM OF SPEECH. Yet

too many Americans have seen their accounts suspended, banned, or fraudulently reported for unclear violations of user policies. No matter your views, if you suspect political bias caused such an action to be taken

against you, share your story with President Trump.

|

| |

National Coalition Against Censorship organises nude nipples event with photographer Spencer Tunick

|

|

|

| 16th May 2019

|

|

| Thanks to Nick

See article from

artnews.com |

To challenge online censorship of art featuring naked bodies or body parts, photographer Spencer Tunick, in collaboration with the National Coalition Against Censorship, will stage a nude art action in New York on June 2. The event will bring together

100 undressed participants at an as-yet-undisclosed location, and Tunick will photograph the scene and create an installation using donated images of male nipples. Artists Andres Serrano, Paul Mpagi Sepuya, and Tunick have given photos of their

own nipples to the cause, as have Bravo TV personality Andy Cohen, Red Hot Chili Peppers drummer Chad Smith, and actor/photographer Adam Goldberg. In addition, the National Coalition Against Censorship has launched a #WeTheNipple campaign through

which Instagram and Facebook users can share their experiences with censorship and advocate for changes to the social media platforms' guidelines related to nudity.

|

| |

House of Lords: Questions about DNS over HTTPS

|

|

|

| 15th May 2019

|

|

| See article from theyworkforyou.com |

At the moment when internet users want to view a page, they specify the page they want in the clear. ISPs can see the page requested and block it if the authorities don't like it. A new internet protocol has been launched that encrypts the specification

of the page requested so that ISPs can't tell what page is being requested, so can't block it. This new DNS Over HTTPS protocol is already available in Firefox which also provides an uncensored and encrypted DNS server. Users simply have to change the

settings in about:config (being careful of the dragons of course) Questions have been

raised in the House of Lords about the impact on the UK's ability to censor the internet. House of Lords, 14th May 2019, Internet Encryption Question Baroness Thornton Shadow Spokesperson (Health)

2:53 pm, 14th May 2019 To ask Her Majesty's Government what assessment they have made of the deployment of the Internet Engineering Task Force's new "DNS over HTTPS" protocol and its implications for the blocking

of content by internet service providers and the Internet Watch Foundation; and what steps they intend to take in response. Lord Ashton of Hyde The Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport

My Lords, DCMS is working together with the National Cyber Security Centre to understand and resolve the implications of DNS over HTTPS, also referred to as DoH, for the blocking of content online. This involves liaising

across government and engaging with industry at all levels, operators, internet service providers, browser providers and pan-industry organisations to understand rollout options and influence the way ahead. The rollout of DoH is a complex commercial and

technical issue revolving around the global nature of the internet. Baroness Thornton Shadow Spokesperson (Health) My Lords, I thank the Minister for that Answer, and I apologise to the House for

this somewhat geeky Question. This Question concerns the danger posed to existing internet safety mechanisms by an encryption protocol that, if implemented, would render useless the family filters in millions of homes and the ability to track down

illegal content by organisations such as the Internet Watch Foundation. Does the Minister agree that there is a fundamental and very concerning lack of accountability when obscure technical groups, peopled largely by the employees of the big internet

companies, take decisions that have major public policy implications with enormous consequences for all of us and the safety of our children? What engagement have the British Government had with the internet companies that are represented on the Internet

Engineering Task Force about this matter? Lord Ashton of Hyde The Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport My Lords, I thank the noble Baroness for discussing this

with me beforehand, which was very welcome. I agree that there may be serious consequences from DoH. The DoH protocol has been defined by the Internet Engineering Task Force. Where I do not agree with the noble Baroness is that this is not an obscure

organisation; it has been the dominant internet technical standards organisation for 30-plus years and has attendants from civil society, academia and the UK Government as well as the industry. The proceedings are available online and are not restricted.

It is important to know that DoH has not been rolled out yet and the picture in it is complex--there are pros to DoH as well as cons. We will continue to be part of these discussions; indeed, there was a meeting last week, convened by the NCSC, with

DCMS and industry stakeholders present. Lord Clement-Jones Liberal Democrat Lords Spokesperson (Digital) My Lords, the noble Baroness has raised a very important issue, and it sounds from the

Minister's Answer as though the Government are somewhat behind the curve on this. When did Ministers actually get to hear about the new encrypted DoH protocol? Does it not risk blowing a very large hole in the Government's online safety strategy set out

in the White Paper? Lord Ashton of Hyde The Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport As I said to the noble Baroness, the Government attend the IETF. The

protocol was discussed from October 2017 to October 2018, so it was during that process. As far as the online harms White Paper is concerned, the technology will potentially cause changes in enforcement by online companies, but of course it does not

change the duty of care in any way. We will have to look at the alternatives to some of the most dramatic forms of enforcement, which are DNS blocking. Lord Stevenson of Balmacara Opposition Whip (Lords)

My Lords, if there is obscurity, it is probably in the use of the technology itself and the terminology that we have to use--DoH and the other protocols that have been referred to are complicated. At heart, there are two issues at

stake, are there not? The first is that the intentions of DoH, as the Minister said, are quite helpful in terms of protecting identity, and we do not want to lose that. On the other hand, it makes it difficult, as has been said, to see how the Government

can continue with their current plan. We support the Digital Economy Act approach to age-appropriate design, and we hope that that will not be affected. We also think that the soon to be legislated for--we hope--duty of care on all companies to protect

users of their services will help. I note that the Minister says in his recent letter that there is a requirement on the Secretary of State to carry out a review of the impact and effectiveness of the regulatory framework included in the DEA within the

next 12 to 18 months. Can he confirm that the issue of DoH will be included? Lord Ashton of Hyde The Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport Clearly, DoH is on

the agenda at DCMS and will be included everywhere it is relevant. On the consideration of enforcement--as I said before, it may require changes to potential enforcement mechanisms--we are aware that there are other enforcement mechanisms. It is not true

to say that you cannot block sites; it makes it more difficult, and you have to do it in a different way. The Countess of Mar Deputy Chairman of Committees, Deputy Speaker (Lords) My Lords, for the

uninitiated, can the noble Lord tell us what DoH means--very briefly, please? Lord Ashton of Hyde The Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport It is not possible

to do so very briefly. It means that, when you send a request to a server and you have to work out which server you are going to by finding out the IP address, the message is encrypted so that the intervening servers are not able to look at what is in

the message. It encrypts the message that is sent to the servers. What that means is that, whereas previously every server along the route could see what was in the message, now only the browser will have the ability to look at it, and that will put more

power in the hands of the browsers. Lord West of Spithead Labour My Lords, I thought I understood this subject until the Minister explained it a minute ago. This is a very serious issue. I was

unclear from his answer: is this going to be addressed in the White Paper? Will the new officer who is being appointed have the ability to look at this issue when the White Paper comes out? Lord Ashton of Hyde The

Parliamentary Under-Secretary of State for Digital, Culture, Media and Sport It is not something that the White Paper per se can look at, because it is not within the purview of the Government. The protocol is designed by the

IETF, which is not a government body; it is a standards body, so to that extent it is not possible. Obviously, however, when it comes to regulating and the powers that the regulator can use, the White Paper is consulting precisely on those matters,

which include DNS blocking, so it can be considered in the consultation. |
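For readers as puzzled as the noble Lords, here is a minimal Python sketch of what a DNS over HTTPS lookup carries. It builds an ordinary DNS query in wire format and packs it into the `dns=` parameter of a GET request in the style of RFC 8484. No request is actually sent, the resolver address `https://doh.example/dns-query` is a placeholder and not a real service, and the helper names are made up for the example.

```python
import base64
import struct


def build_dns_query(hostname: str, qtype: int = 1) -> bytes:
    """Build a minimal DNS query message for one question (qtype 1 = A record)."""
    # Header: ID 0, flags 0x0100 (recursion desired), one question, no other records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # The name is encoded as length-prefixed labels ending with a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # class 1 = IN (internet)
    return header + question


def doh_get_url(resolver_url: str, hostname: str) -> str:
    """Return the URL a client would fetch over HTTPS to resolve hostname."""
    wire = build_dns_query(hostname)
    # base64url without padding, as used for the GET form of DoH.
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return f"{resolver_url}?dns={encoded}"


# Placeholder resolver address, not a real service.
print(doh_get_url("https://doh.example/dns-query", "www.example.com"))
# prints https://doh.example/dns-query?dns=AAABAAABAAAAAAAAA3d3dwdleGFtcGxlA2NvbQAAAQAB
```

Because that URL is fetched inside an HTTPS connection, an ISP on the path sees only an encrypted session with the resolver rather than the name being looked up, which is why DNS-based blocking stops working for such lookups.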

| |

European politicians vs Silicon Valley

|

|

|

| 14th May 2019

|

|

| See article from wsws.org |

The German President Frank-Walter Steinmeier opened the re:publica 2019 conference in Berlin last week with a speech about internet censorship. The World Socialist Web Site reported the speech: With cynical references to Germany's

Basic Law and the right to freedom of speech contained within it, Steinmeier called for new censorship measures and appealed to the major technology firms to enforce already existing guidelines more aggressively. He stated, The

upcoming 70th anniversary of the German Basic Law reminds us of a connection that pre-dates online and offline: liberty needs rules--and new liberties need new rules. Furthermore, freedom of opinion brings with it responsibility for opinion. He stressed

that he knew there are already many rules, among which he mentioned the notorious Network Enforcement Law (Netz DG), but it will be necessary to argue over others. He then added, Anyone who creates space for a political discussion

with a platform bears responsibility for democracy, whether they like it or not. Therefore, democratic regulations are required, he continued. Steinmeier said that he felt this is now understood in Silicon Valley. After a lot of words and announcements,

discussion forums, and photogenic appearances with politicians, it is now time for Facebook, Twitter, YouTube and Co. to finally acknowledge their responsibility for democracy, finally put it into practice.

|

| |

Government announces new law to ban watching porn in public places

|

|

|

| 13th May 2019

|

|

| See article from dailymail.co.uk

|

Watching pornography on buses is to be banned, ministers have announced. Bus conductors and the police will be given powers to tackle those who watch sexual material on mobile phones and tablets. Ministers are also drawing up plans for a

national database of claimed harassment incidents. It will record incidents at work and in public places, and is likely to cover wolf-whistling and cat-calling as well as more serious incidents. In addition, the Government is considering whether

to launch a public health campaign warning of the effects of pornography -- modelled on smoking campaigns.

|

| |

The Channel Islands is considering whether to join the UK in the censorship of internet porn

|

|

|

| 13th May 2019

|

|

| See article from jerseyeveningpost.com |

As of 15 July, people in the UK who try to access porn on the internet will be required to verify their age or identity online. The new UK Online Pornography (Commercial Basis) Regulations 2018 law does not affect the Channel Islands but the

States have not ruled out introducing their own regulations. The UK Department for Censorship, Media and Sport said it was working closely with the Crown Dependencies to make the necessary arrangements for the extension of this legislation to the

Channel Islands. A spokeswoman for the States said they were monitoring the situation in the UK to inform our own policy development in this area.

|

| |

Trump to monitor the political censorship of the right by social media

|

|

|

| 13th May 2019

|

|

| 4th May 2019. See

article from washingtonpost.com |

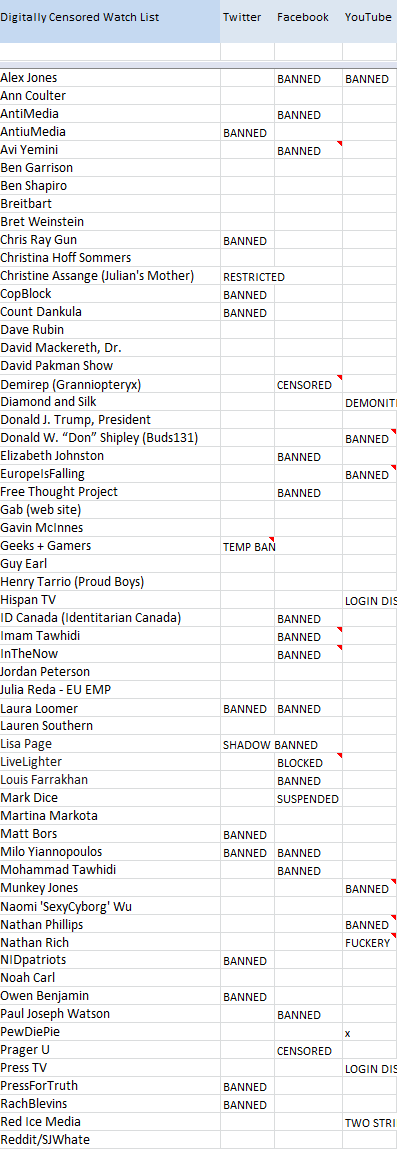

President Trump has threatened to monitor social-media sites for their censorship of American citizens. He was responding to Facebook permanently banning figures and organizations from the political right. Trump tweeted:

I am continuing to monitor the censorship of AMERICAN CITIZENS on social media platforms. This is the United States of America -- and we have what's known as FREEDOM OF SPEECH! We are monitoring and watching, closely!!

On Thursday, Facebook announced it had permanently banned users including Louis Farrakhan, the founder of the Nation of Islam, along with far-right figures Milo Yiannopoulos, Laura Loomer and Alex Jones, the founder of Infowars. The tech

giant removed their accounts, fan pages and affiliated groups on Facebook as well as its photo-sharing service Instagram, claiming that their presence on the social networking sites had become dangerous. For his part, President Trump repeatedly

has accused popular social-networking sites of exhibiting political bias, and threatened to regulate Silicon Valley in response. In a private meeting with Twitter CEO Jack Dorsey last month, Trump repeatedly raised his concerns that the company has

removed some of his followers. On Friday, Trump specifically tweeted he was surprised about Facebook's decision to ban Paul Joseph Watson, a YouTube personality who has served as editor-at-large of Infowars. Update:

Texas bill would allow state to sue social media companies like Facebook and Twitter that censor free speech 13th May 2019. See

article from texastribune.org

A bill before the Texas Senate seeks to prevent social media platforms like Facebook and Twitter from censoring users based on their viewpoints. Supporters say it would protect the free exchange of ideas, but critics say the bill contradicts a federal

law that allows social media platforms to regulate their own content. The measure -- Senate Bill 2373 by state Sen. Bryan Hughes -- would hold social media platforms accountable for restricting users' speech based on personal opinions. Hughes said

the bill applies to social media platforms that advertise themselves as unbiased but still censor users. The Senate State Affairs Committee unanimously approved the bill last week. The Texas Senate approved the bill on April 25 in an 18-12 vote. It now

heads to the House.

|

| |

Responding to fears of an enormous fine from the US authorities, Facebook will set up a privacy oversight committee

|

|

|

| 12th May 2019

|

|

| See article from computing.co.uk |

Facebook will create a privacy oversight committee as part of its recent agreement with the US Federal Trade Commission (FTC), according to reports. According to Politico, Facebook will appoint a government-approved committee to 'guide' the company on

privacy matters. This committee will also consist of company board members. The plans would also see Facebook chairman and CEO Mark Zuckerberg act as a designated compliance officer, meaning that he would be personally responsible and accountable

for Facebook's privacy policies. Last week, it was reported that Facebook could be slapped with a fine of up to $5 billion over its handling of user data and privacy. The FTC launched the investigation last March, following claims that Facebook

allowed organisations, such as political consultancy Cambridge Analytica, to collect data from millions of users without their consent. |

| |

Fake news and criticism of the authorities to be banned even from private internet chats

|

|

|

|

11th May 2019

|

|

| See article from weeklyblitz.net |

The Committee to Protect Journalists has condemned the Singapore parliament's passage of legislation that will be used to stifle reporting and the dissemination of news, and called for the punitive measure's immediate repeal. The Protection

from Online Falsehoods and Manipulation Act, which was passed yesterday, gives all government ministers broad and arbitrary powers to demand corrections, remove content, and block webpages if they are deemed to be disseminating falsehoods against

the public interest or to undermine public confidence in the government, both on public websites and within chat programs such as WhatsApp, according to news reports. Violations of the law will be punishable with maximum 10-year jail terms and

fines of up to 1 million Singapore dollars (US$735,000), according to those reports. The law was passed after a two-day debate and is expected to come into force in the next few weeks. Shawn Crispin, CPJ's senior Southeast Asian

representative, said: This law will give Singapore's ministers yet another tool to suppress and censor news that does not fit with the People's Action Party-dominated government's authoritarian narrative. Singapore's

online media is already over-regulated and severely censored. The law should be dropped for the sake of press freedom.

Law Minister K. Shanmugam said censorship orders would be made mainly against technology companies that hosted the

objectionable content, and that they would be able to challenge the government's take-down requests.

|

| |

|

|

|

| 11th May 2019

|

|

|

Social media censorship is a public concern and needs a public solution. By Scott Bicheno See article

from telecoms.com |

| |

Now Facebook is in court for not protecting victims of sex trafficking, no doubt wishing it hadn't supported the removal of the very same legal protection it now needs

|

|

|

| 7th May 2019

|

|

| See article from chron.com

|

Lawyers for Facebook and Instagram have appeared in Texas courtrooms attempting to dismiss two civil cases that accuse the social media sites of not protecting victims of sex trafficking. The Facebook case involves a Houston woman who in October

said the company's morally bankrupt corporate culture left her prey to a predatory pimp who drew her into sex trafficking as a child. The Instagram case involves a 14-year-old girl from Spring who said she was recruited, groomed and sold in 2018 by a man

she met on the social media site. Of course Facebook is only embroiled in these cases because it supported Congress in passing an anti-trafficking amendment in April 2018. The Stop Enabling Sex Traffickers Act and Fight Online Sex Trafficking Act,

collectively known as SESTA-FOSTA, attempt to make it easier to prosecute owners and operators of websites that facilitate sex trafficking. The legislation removed the legal protection for websites that previously meant they couldn't be held responsible

for the actions of their members. After the Houston suit was filed, a Facebook spokesperson said human trafficking is not permitted on the site and staffers report all instances they're informed about to the National Center for Missing and Exploited

Children. Of course that simply isn't enough any more, and now they have to proactively stop their website from being used for criminal activity. The impossibility of preventing such misuse has led to many websites pulling out of anything that may

be related to people hooking up for sex, lest they be held responsible for something they couldn't possibly prevent. But perhaps Facebook has enough money to pay for lawyers who can argue their way out of such hassles.

The Adult Performers Actors Guild is standing up for sex workers who are tired of being banned from Instagram with no explanation. 7th May 2019. See

article from vice.com

In related news, adult performers are campaigning against being arbitrarily banned from their accounts by Facebook and Instagram. It seems likely that the social media companies are summarily ejecting users detected to have any connection with people

getting together for sex. As explained above, the social media companies are responsible for anything related to sex trafficking happening on their website. In practice they cannot distinguish sex trafficking from consensual sex, so the only

protection available for internet companies is to ban anyone that might have a connection to sex. This reality is clearly impacting those affected. A group of adult performers is starting to organize against Facebook and Instagram for removing

their accounts without explanation. Around 200 performers and models have included their usernames in a letter to Facebook asking the network to address this issue. Alana Evans, president of the Adult Performers Actors Guild (APAG), a union that

advocates for adult industry professionals' rights, told Vice: There are performers who are being deleted because they put up a picture of their freshly painted toenails. In an April 22 letter to Facebook, the Adult Performers Actors

Guild's legal counsel James Felton wrote: Over the course of the last several months, almost 200 adult performers have had their Instagram accounts terminated without explanation. In fact, every day, additional

performers reach out to us with their termination stories. In the large majority of instances, there was no nudity shown in the pictures. However, it appears that the accounts were terminated merely because of their status as an adult performer.

Efforts to learn the reasons behind the termination have been futile. Performers are asked to send pictures of their names to try to verify that the accounts are actually theirs and not put up by frauds. Emails are sent and there is no

reply.

|

| |

Google's Chrome browser is set to allow users to disable 3rd party tracking cookies

|

|

|

| 7th May 2019

|

|

| See article from

dailymail.co.uk |

Google is set to roll out a dashboard-like function in its Chrome browser to offer users more control in fending off tracking cookies, the Wall Street Journal has reported. While Google's new tools are not expected to significantly curtail its

ability to collect data itself, it would help the company press its sizable advantage over online-advertising rivals, the newspaper said. Google has been working on the cookies plan for at least six years, in stops and starts, but accelerated the

work after news broke last year that personal data of Facebook users was improperly shared with Cambridge Analytica. The company is mostly targeting cookies installed by profit-seeking third parties, separate from the owner of the website a user

is actively visiting, the Journal said. Apple Inc in 2017 stopped the majority of tracking cookies on its Safari browser by default and Mozilla Corp's Firefox did the same a year later.

|

| |

The EFF argues that social media companies solving the anti-vax problem by censoring anti-vax information is ineffective

|

|

|

|

4th May 2019

|

|

| See article from baekdal.com

See article from eff.org by Jillian C York |

The EFF has written an impassioned article about the ineffectiveness of censorship of anti-vax information as a means of convincing people that vaccinations are the right thing for their kids. But before presenting the EFF

case, I read a far more interesting article suggesting that the authorities are on totally the wrong track anyway, and that no amount of blocking anti-vax claims, or bombarding people with true information, is going to make any difference. The

article suggests that many anti-vaxers don't actually believe that vaccines cause autism anyway, so censorship of negative info and pushing of positive info won't work because people know that already. Thomas Baekdal explains:

What makes a parent decide not to vaccinate their kids? One reason might be an economic one. In the US (in particular), healthcare comes at a very high cost, and while there are ways to vaccinate kids cheaper (or even for free),

people in the US are very afraid of engaging too much with the healthcare system. So as journalists, we need to focus on that instead, because it's highly likely that many people who make this decision do so because they worry

about their financial future. In other words, not wanting to vaccinate their kids might be an excuse that they cling to because of financial concerns. Perhaps it is the same with denying climate change. Perhaps it is not the belief in

the science of climate change that is the reason for denial. Perhaps it is more that people find the solutions unpalatable. Perhaps they don't want to support climate change measures simply because they don't fancy being forced to become vegetarian and

don't want to be priced off the road by green taxes. Anyway the EFF have been considering the difficulties faced by social media companies trying to convince anti-vaxers by censorship and by force feeding them 'the correct information'. The EFF

writes:

With measles cases on the rise for the first time in decades and anti-vaccine (or anti-vax) memes spreading like wildfire on social media, a number of companies--including Facebook, Pinterest, YouTube, Instagram, and GoFundMe--recently banned

anti-vax posts. But censorship cannot be the only answer to disinformation online. The anti-vax trend is a bigger problem than censorship can solve. And when tech companies ban an entire category of content like this, they have a

history of overcorrecting and censoring accurate, useful speech--or, even worse, reinforcing misinformation with their policies. That's why platforms that adopt categorical bans must follow the Santa Clara Principles on Transparency and Accountability

in Content Moderation to ensure that users are notified when and about why their content has been removed, and that they have the opportunity to appeal. Many intermediaries already act as censors of users' posts, comments, and

accounts, and the rules that govern what users can and cannot say grow more complex with every year. But removing entire categories of speech from a platform does little to solve the underlying problems. Tech companies and online

platforms have other ways to address the rapid spread of disinformation, including addressing the algorithmic megaphone at the heart of the problem and giving users control over their own feeds. Anti-vax information is able to

thrive online in part because it exists in a data void in which available information about vaccines online is limited, non-existent, or deeply problematic. Because the merit of vaccines has long been considered a decided issue, there is little recent

scientific literature or educational material to take on the current mountains of disinformation. Thus, someone searching for recent literature on vaccines will likely find more anti-vax content than empirical medical research supporting vaccines.